Introduction

Working on a data engineering team, I am often asked to troubleshoot SSIS (SQL Server Integration Services) data flow performance issues. While the usual suspects include poorly written queries (why are you using SELECT * on a table with 900 columns when you only need to return three of them?), lack of indexes (seriously, why don’t any of your tables have a primary key?), and poor SSIS package design (do you REALLY need to SORT those 80 million rows before putting them into a staging table?), an area I feel doesn’t get enough attention are the Data Flow Task and OLE DB Destination properties. Specifically, this blog focuses on Data Flow properties AutoAdjustBufferSize, DefaultBufferMaxRows, and DefaultBufferSize and OLE DB Destination properties Rows per batch and Maximum insert commit size.

There are several blogs explaining what these values are, but not many examples of how one would go about finding optimal values. As with most performance tuning recommendations in SQL Server, the answer is, “it depends.” But I hope to at least get you started down the right path. Let’s begin.

Environment Setup

To simulate a realistic and slightly constrained environment, I set up a Windows 10 VM with 2 cores and 8 GB of memory. I am running SQL Server 2019 CU 18 and Visual Studio Enterprise 2019. The SQL Server is set up using defaults, and everything is running on the C: Drive, including the database data and log files. This setup is intended to highlight performance changes under less-than-ideal conditions without exceeding my $150 dev subscription budget.

Creating Source and Destination Databases

Using the following scripts, I created the source and destination databases, then created a Customer table with 10 million rows of random data from master.dbo.spt_values. Between SSIS package executions, I would drop and recreate the destination database and table.

USE master;

/*Create source and destination databases*/

CREATE DATABASE SSISTestSource;

ALTER DATABASE SSISTestSource SET RECOVERY SIMPLE WITH NO_WAIT;

CREATE DATABASE SSISTestDestination;

ALTER DATABASE SSISTestDestination SET RECOVERY SIMPLE WITH NO_WAIT;

/*Create source table*/

USE SSISTestSource;

CREATE TABLE dbo.CustomerSource

(

CustomerSourceId int IDENTITY(1, 1),

FirstName nvarchar(50) NOT NULL,

LastName nvarchar(50) NOT NULL,

CONSTRAINT CustomerSource_CustomerSourceId PRIMARY KEY CLUSTERED (CustomerSourceId ASC),

);

/*Insert 10 million rows in batches of 100,000. Credit to Jonathan Kehayias for this script*/

INSERT dbo.CustomerSource

(

FirstName,

LastName

)

SELECT TOP (100000)

a.name,

b.name

FROM master.dbo.spt_values AS a

CROSS JOIN master.dbo.spt_values AS b

WHERE a.name IS NOT NULL

AND b.name IS NOT NULL

ORDER BY NEWID();

GO 100

/*Note: This finished in 58 seconds*/

/*CREATE DESTINATION TABLE*/

USE SSISTestDestination;

CREATE TABLE dbo.CustomerDestination

(

CustomerDestinationId int IDENTITY(1, 1),

FirstName nvarchar(50) NOT NULL,

LastName nvarchar(50) NOT NULL,

CONSTRAINT CustomerDestination_CustomerDestinationId PRIMARY KEY CLUSTERED (CustomerDestinationId ASC),

);

Creating the SSIS Package

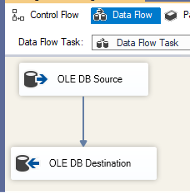

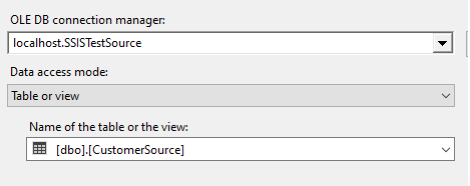

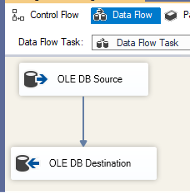

Next, I created an SSIS package with a Data Flow Task to transfer data from dbo.CustomerSource to dbo.CustomerDestination. This involves adding an OLE DB Source and an OLE DB Destination in the Data Flow, connecting them, and configuring the connection managers appropriately.

If you’re reading this you likely already know the basics of creating an SSIS package, so I am going to move on to the good part.

Identifying Performance Bottlenecks

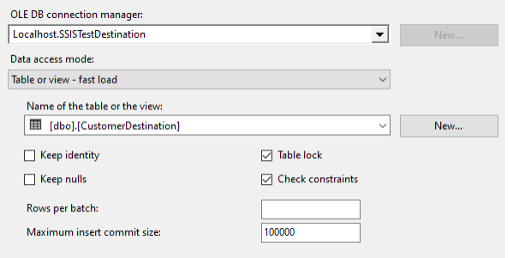

My data flow consists of an OLE DB Source and OLE DB Destination. I used the Table or view access mode and left everything else at their default values.

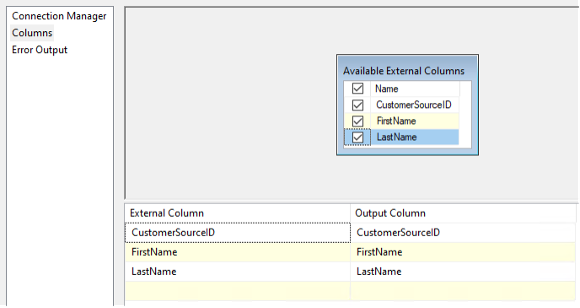

The above screenshots probably look familiar to you, but what is often overlooked is the Data Flow Task properties themselves. An important distinction is that the data flow task properties apply to the entire data flow while you can have multiple OLE DB Destinations that can be independently configured inside a single data flow.

Exploring Data Flow Task Properties

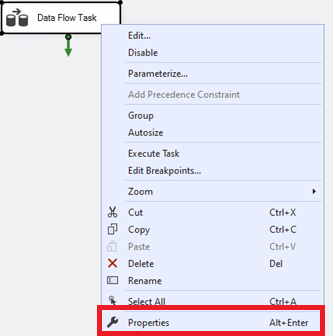

If you are having trouble finding these values, from the control flow, right-click on your Data Flow Task and select properties.

Let the Tests Begin

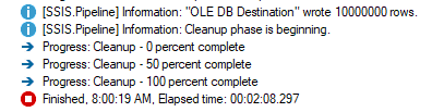

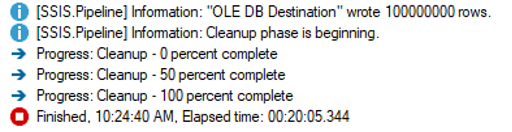

For the first test, I executed the package using all defaults with the following result:

Just over two minutes to load 10 million rows. That doesn’t sound so bad, but in reality, I would tell my client that if the two databases exist on the same server, there’s no need to pull the data into SSIS and push it back out using a Data Flow Task. So, what would happen if they just used a SQL script to transfer the rows instead?

Comparing SSIS with T-SQL Approach

USE SSISTestDestination;

INSERT INTO dbo.CustomerDestination (FirstName, LastName)

SELECT FirstName, LastName

FROM SSISTestSource.dbo.CustomerSource

ORDER BY CustomerSourceID;

/*SQL Server Execution Times:

CPU time = 17687 ms, elapsed time = 78954 ms.*/

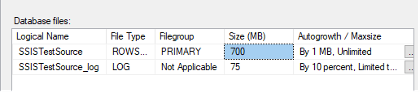

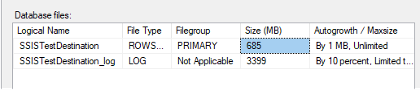

Ahh, one minute and eight seconds to let SQL Server do the heavy lifting. It’s important to note that we inserted these 10 million rows in a single transaction, which also causes the transaction log to fill up. On the source table, we loaded in batches of 100,000 and the transaction log only grew to 75 MB, whereas loading the entire table in one batch made the log file grow to 3399 MB. If you go this route you will need to make sure your log drive has enough capacity.

If this was my client’s package, I’d advise them to either put this directly in a SQL Agent Job as a T-SQL command. If SSIS was absolutely necessary, I’d recommend putting it into an Execute SQL Task. However, this is a demo on tuning Data Flow Tasks, so my goal now is to change these default settings to get as close as possible to the 78 second benchmark.

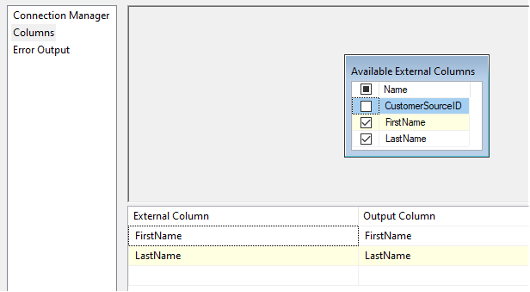

First, looking at the package execution, I saw a warning that the CustomerSourceId column was not used in the dataflow.

[SSIS.Pipeline] Warning: The output column "CustomerSourceId" (36) on output "OLE DB Source Output" (35) and component "OLE DB Source" (24) is not subsequently used in the Data Flow task. Removing this unused output column can increase Data Flow task performance.

There’s another data flow property called RunInOptimizedMode, which “improves performance by removing unused columns, outputs, and components from the data flow,” but best practice is to remove unused columns at the source. In my example, this can be done easily be deselecting the column in the OLE DB Source.

Applying Optimization Techniques

Tuning Data Flow Task Properties

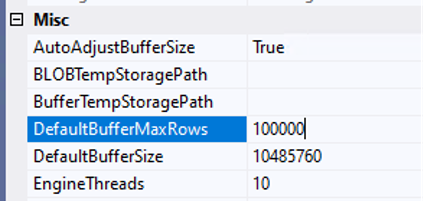

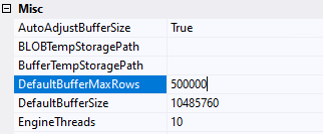

Next, I look at the Data Flow Task properties. The default setting for DefaultBufferMaxRows is 10,000 and the DefaultBufferSize is 10MB. SSIS will load 10,000 rows into its buffer, or 10MB, whichever comes first. Our package is loading two columns, each of data type nvarchar (50). The size of nvarchar is 2* n bytes, so if we take 2 columns * (50 * 2) * 10000 rows we end up with an estimate of 2,000,000 bytes per row, or ~2MB.

As a reminder, SQL Server is using 4GB of memory, which leaves 4GB for the OS and Visual Studio and whatever other background processes are running. While my SSIS package won’t be able to use the full 4GB, it should certainly be able to allocate more than 2 MB.

If you look back to the initial customer table load which took 58 seconds, you’ll see that it was done in batches of 100,000 rows. We can update the DefaultBufferMaxRows to 100,000, but we will hit our DefaultBufferSize cap. You could either increase that value or set AutoAdjustBufferSize to True, which will automatically calculate the buffer size and override the DefaultBufferSize value. I’ll opt for the latter. That way, as I make adjustments, I don’t have to keep recalculating the DefaultBufferSize.

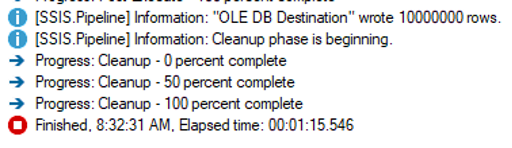

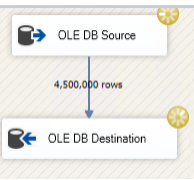

To recap: I removed CustomerSourceId from the dataflow source, set AutoAdjustBufferSize to True, and DefaultBufferMaxRows to 100,000. I ran the package again, and shazam!

Serious progress has been made.

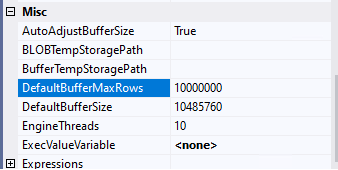

Whether or not the 3 second difference between the SSIS package and the SQL query is statistically significant, the 47 seconds between using defaults and tuning the Data Flow task certainly is. So that begs the question: if setting DefaultBufferMaxRows to 100,000 is good, that means setting it to 10,000,000 is 100x better, right? Wrong.

*> Error: A buffer failed while allocating 2147483448 bytes.

Error: The system reports 56 percent memory load. There are 8589463552 bytes of physical memory with 3698368512 bytes free. There are 4294836224 bytes of virtual memory with 4031754240 bytes free. The paging file has 9931640832 bytes with 3992477696 bytes free.

Information: Buffer manager allocated 0 megabyte(s) in 0 physical buffer(s).

[SSIS.Pipeline] Error: The Data Flow task failed to create a buffer to call PrimeOutput for output "OLE DB Source" (24) on component "OLE DB Source Output" (35). This error usually occurs due to an out-of-memory condition.*

At some point you’re going to run out of resources, and on my test system, 10 million is way over.

So, what about 500,000 rows?

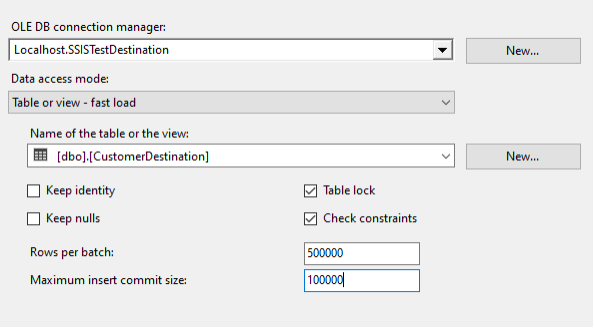

Advanced Settings in OLE DB Destination

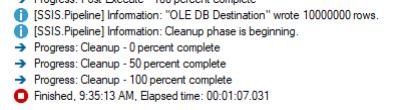

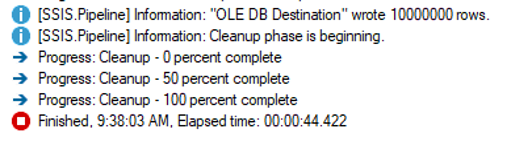

Things keep getting better! But wait, there’s more. We still haven’t tuned the OLE DB destination. The default Maximum insert commit size of 2147483647 will attempt to load all rows in a single commit. But what if we told the OLE DB destination that it would be receiving records in batches of 500,000 but we only want to commit 100,000 at a time?

Wow, 44 seconds—a full half minute faster than the SQL Script! How is that possible? I would guess it has to do with either the ORDER BY, or, more likely, the fact it had to commit the entire 10,000,000 rows in a single transaction. Testing could be done to fine tune the SQL statement, but you’re here for SSIS so that test will have to wait for another day.

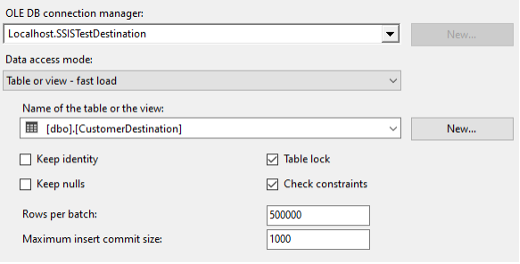

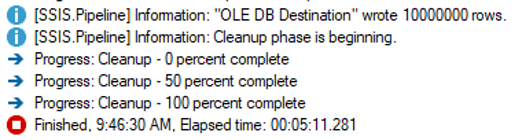

So now that we have the SSIS package running at epic speeds, is it possible to be wrong? Well, let’s see the result if we accidentally set the Maximum insert commit size to 1,000 instead of 100,000.

Whoa! Over 5 minutes for the same record set. As you can see, you can definitely get this wrong, so it’s important to record your benchmarks before making changes so you have something to compare to. “It seems faster” or “everything is slow” isn’t super helpful when performance tuning.

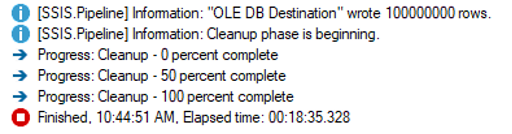

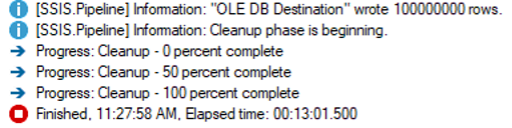

The final test was to see what would happen on a large dataset. I ran the same query to load dbo.CustomerSource table 900 more times, giving us a total of 100 million records. I reverted the SSIS package to use all defaults and got the following result.

This is to be expected – 10x more rows took 10x more time. Then I set everything back to the “optimal” values from the 10 million row example, and here is the outcome.

I was expecting better than a 90 second improvement, so I checked the execution log and there were hundreds of errors like the following that didn’t appear in the 10 million row example:

*> Information: The buffer manager failed a memory allocation call for

108000000 bytes, but was unable to swap out any buffers to relieve memory pressure. 14 buffers were considered and 14 were locked. Either not enough memory is available to the pipeline because not enough are installed, other processes were using it, or too many buffers are locked.

Information: Buffer manager allocated 309 megabyte(s) in 3 physical buffer(s).*

So, I reduced it to batches of 250,000.

This is more like what I was expecting. However, I still received a handful of out memory allocation errors.

*> Information: The buffer manager failed a memory allocation call for

54000000 bytes, but was unable to swap out any buffers to relieve memory pressure. 14 buffers were considered and 14 were locked. Either not enough memory is available to the pipeline because not enough are installed, other processes were using it, or too many buffers are locked.

Information: Buffer manager allocated 52 megabyte(s) in 1 physical buffer(s).*

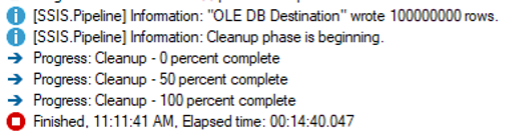

Still scratching my head, I wondered what would happen if I didn’t specify a batch size in the OLE DB destination and let SSIS handle it?

Successfully finished 9 minutes faster than using defaults and no error messages. However, this is more than 10x longer than the fastest 10 million row example, so there’s more adjustments that could be made. I’ll save those for another day.

Key Tips and Best Practices

Some takeaways from this demo are:

- Serious performance gains can be made by adjusting the default SSIS package settings

- Your settings may need to change as your data changes

- Adjusting the defaults can make things much worse, so be sure to grab benchmark statistics on your packages before making changes

Some other considerations for these settings:

- How much memory is available for SSIS? Is it on the same server as the SQL Data? Are there other applications running like SSRS/SSAS?

- Do you have one SSIS package running at a time, or do you have multiple SQL agent jobs executing multiple SSIS packages simultaneously?

- Does your SSIS package have multiple data flows running in parallel?

- Does your Data Flow Task have multiple OLE DB sources or destinations?

- Could I be performing premature optimization?

Keeping in mind that performance is a feature, not a test!

Additional Testing

Some other tests you may want to try:

- Different rows per batch settings

- Different commit sizes

- Set the DefaultBufferSize to a value you’ve calculated for the size of your rows or to cap memory used by the dataflow, and disable AutoAdjustBufferSize

- Enable logging at the package or data flow level

- Use perfmon to monitor SSIS resource usage

- Try using SSIS Scale Out and compare the results

Too much information? Still don’t know what to do or where to start? Try this:

- Go into SQL Agent and record how long the offending package normally takes.

- In Data Flow Task properties enable AutoAdjustBufferSize and set DefaultBufferMaxRows to 50000.

- In the OLE DB Destination set Maximum insert commit size to 100000.

- Deploy the package and execute it, recording how long it takes to run.

- Rollback your changes if performance got significantly worse. Adjust as necessary.

Additional Resources

Microsoft Documentation:

Want to improve your SSIS performance? We can help. Get in touch with an expert.

Boosting SSIS Data Flow Task Performance FAQs

1. How to improve the performance of data flow tasks in SSIS?

To improve performance, optimize Data Flow Task properties like AutoAdjustBufferSize, DefaultBufferMaxRows, and DefaultBufferSize. Additionally, tune the OLE DB Destination properties such as Rows per batch and Maximum insert commit size.

2. How to make SSIS faster?

Making SSIS faster involves optimizing queries, ensuring proper indexing, configuring buffer settings, and adjusting batch sizes. Also, consider using parallel processing and fine-tuning package designs to minimize data movement and transformations.

3. Which transformation is best performing in SSIS?

The best-performing transformation in SSIS often depends on the specific use case, but generally, transformations that operate in memory, like the Lookup transformation with caching enabled, tend to perform well. Avoid blocking transformations when possible, as they can slow down the data flow.

4. What is the primary purpose of the Data Flow Task in SSIS?

The primary purpose of the Data Flow Task in SSIS is to extract, transform, and load (ETL) data between different sources and destinations. It facilitates data movement and transformation within a package, enabling complex data integration workflows.

5. Can using a SQL script for data transfer be faster than using SSIS?

Yes, using a SQL script for data transfer can sometimes be faster, especially when transferring large volumes of data between tables on the same server. However, SSIS offers more flexibility and control over the ETL process, making it suitable for complex data integration tasks.

6. What should I consider when optimizing SSIS packages?

When optimizing SSIS packages, consider the available memory, server setup, and specific data requirements. Also, evaluate how many SSIS packages are running simultaneously and whether multiple data flows or OLE DB sources and destinations are involved.