In This Blog

- Granular Control and Visibility with Multi-stage Pipelines

- Azure DevOps Multi-stage Pipelines

- The Right Tools for Consistent Resource Deployment

- Tools for CRUD (Create, Read, Update, Delete) Operations

- Declarative Database Deployments with SqlPackage

- SqlPackage Tool

- Putting It All Together

- Frequently Asked Questions

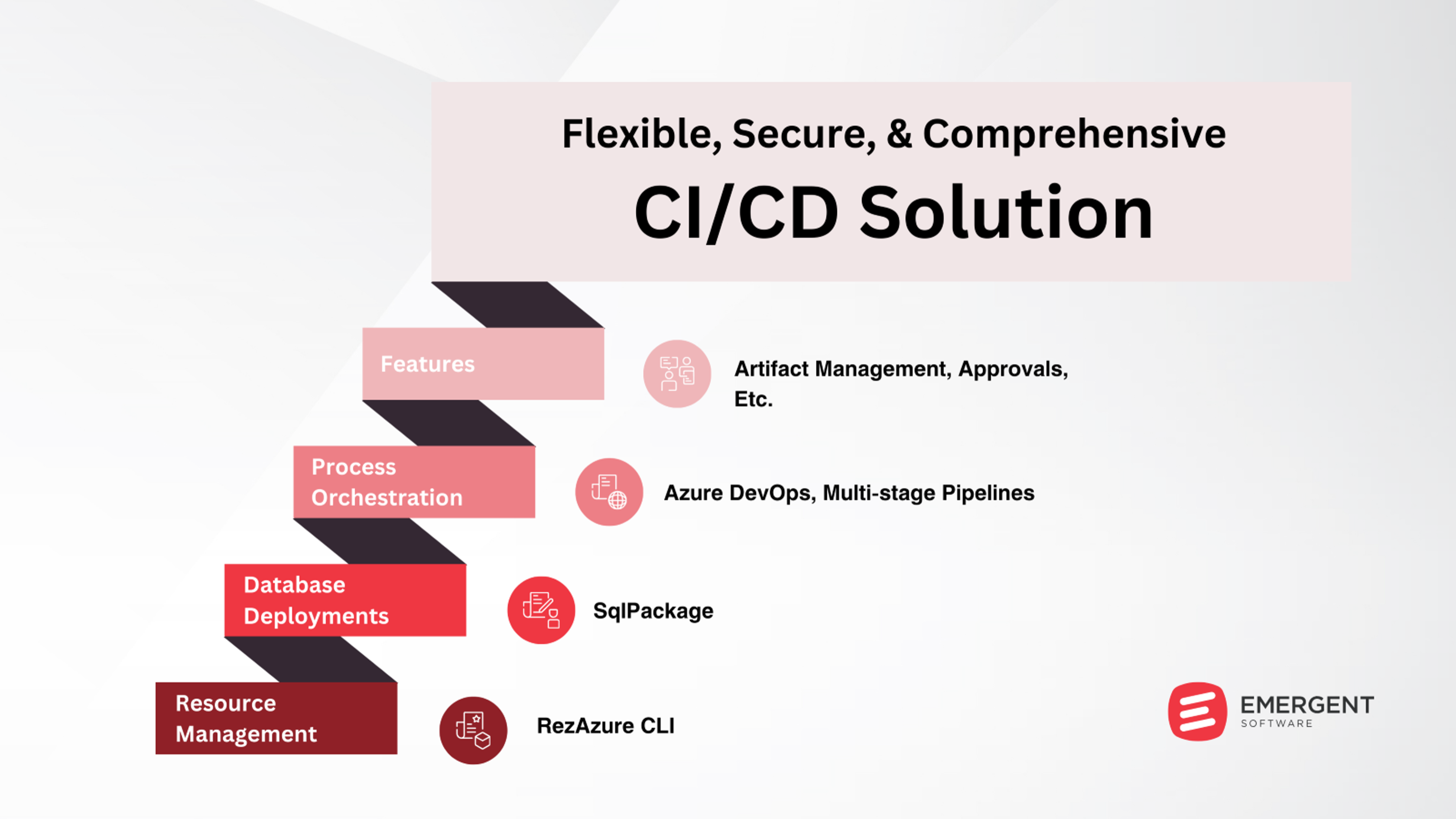

Implementing a robust continuous delivery pipeline is crucial for organizations looking to deliver updates to their Azure SQL databases swiftly and reliably. By combining Azure CLI for resource management, SqlPackage for database deployments, and Azure DevOps multi-stage pipelines for process orchestration, along with features like approvals and artifact management, you can create a flexible, secure, and comprehensive CI/CD solution.

This approach leverages idempotent operations (meaning an operation can be repeated without changing the result) and "everything-as-code" (IaC) principles, ensuring your database deployments are repeatable and consistent across development, QA, and production environments. With idempotent resource deployments, you can run the same IaC templates or scripts multiple times without worrying about unintended changes or configuration drift. And by capturing all of your database definitions, migration scripts, and deployment logic as version-controlled code, you establish a single source of truth that drives consistent deployments across all stages of your pipeline.

You may also like: SQL Performance Tuning Best Practices

Granular Control and Visibility with Multi-stage Pipelines

Azure DevOps Multi-stage Pipelines

At the heart of this continuous delivery approach lies Azure DevOps multi-stage pipelines. These pipelines consist of stages, jobs, steps, and tasks, allowing you to define your end-to-end deployment process with a high degree of granularity and control.

By organizing your pipeline into logical stages like development, QA, and production, each with its own set of jobs, you can manage the flow of changes through your environments effectively. Associating each stage with a specific environment allows you to configure stage-specific variables and resources, as well as introduce manual approvals and quality gates between stages.

For example, consider a pipeline for a mission-critical customer-facing application with strict quality and compliance requirements. You could set up your pipeline with:

- A development stage that automatically triggers on each merge to the main branch. This stage would build the latest database changes, run unit tests, and deploy to a development database for integration testing.

- A QA stage that deploys the successful development build to a staging environment after manual approval from the QA team lead. This stage would run a comprehensive suite of automated integration and regression tests, as well as manual exploratory tests, to ensure the database changes meet the required quality standard.

- A pre-production stage that deploys the validated QA build to a pre-production environment, mimicking the production setup. This stage would require approval from the application owners and stakeholders, and serve as a final dress rehearsal before the production deployment.

- A production stage that requires additional approvals from the change advisory board and deploys during a designated low-traffic maintenance window. This stage would enforce stringent rollback and recovery procedures to mitigate any potential impact to end-users.

By defining these granular stages with clear entry and exit criteria, responsible parties, and quality gates, you establish a robust and transparent deployment process that balances agility with reliability. Automated builds and tests in the development stage enable fast iterations and feedback loops, while manual approvals and progressive quality checks in the QA and pre-production stages ensure that only verified changes are promoted to production. And with the ability to configure stage-specific variables, such as database connection strings or feature flags, you can easily manage environmental differences across the pipeline.

The Right Tools for Consistent Resource Deployment

Tools for CRUD (Create, Read, Update, Delete) Operations

When it comes to deploying and managing the Azure resources that host your SQL databases, such as servers, firewall rules, and failover groups, consistency and repeatability are paramount. That's where tools like Azure CLI, Azure PowerShell, and Infrastructure-as-Code solutions come into play.

Azure CLI is a versatile cross-platform command-line tool for managing virtually every aspect of your Azure environment. Its support for idempotent operations makes it an ideal choice for scripting complex deployments in a consistent and repeatable manner. You can use Azure CLI to create and configure your Azure SQL servers and databases, and rest assured that running the same commands multiple times will only apply the necessary changes.

For instance, let's say you want to automate the provisioning of a new Azure SQL server and database for each feature branch in your development environment. With Azure CLI, you could define a parameterized script that takes in variables like the branch name, server admin credentials, and database tier:

```bash

# Create Azure SQL server

az sql server create \

--name "sqlserver-$BRANCH_NAME" \

--resource-group "$RESOURCE_GROUP" \

--location "$LOCATION" \

--admin-user "$SQL_ADMIN_USER" \

--admin-password "$SQL_ADMIN_PASSWORD"

# Create Azure SQL database

az sql db create \

--name "sqldb-$BRANCH_NAME" \

--server "sqlserver-$BRANCH_NAME" \

--resource-group "$RESOURCE_GROUP" \

--service-objective "$SQL_DATABASE_TIER"

```

By embedding this script in your Azure DevOps pipeline, you can automatically spin up isolated database environments for each feature branch, enabling parallel development and testing streams. And thanks to the idempotent nature of the az sql server create and az sql db create commands, you can safely re-run the script multiple times for the same branch without risking duplicate or conflicting resources.

Azure PowerShell provides a similar set of capabilities but is built on PowerShell cmdlets and modules. It may be the preferred choice for teams already invested in PowerShell-based automation. Both Azure CLI and PowerShell can be seamlessly invoked from Azure DevOps pipeline tasks, making it easy to integrate them into your end-to-end deployment process.

For teams looking to adopt an Infrastructure-as-Code (IaC) approach, third-party tools like Terraform and Ansible are popular options. These tools allow you to define your Azure resource configurations in declarative templates that can be version-controlled alongside your application code.

For example, here's how you might define an Azure SQL server and database using Terraform HCL syntax:

```hcl

resource "azurerm_sql_server" "example" {

name = "sqlserver-${var.branch_name}"

resource_group_name = var.resource_group

location = var.location

version = "12.0"

administrator_login = var.sql_admin_user

administrator_login_password = var.sql_admin_password

}

resource "azurerm_sql_database" "example" {

name = "sqldb-${var.branch_name}"

resource_group_name = var.resource_group

location = var.location

server_name = azurerm_sql_server.example.name

edition = var.sql_database_tier

}

```

With the Terraform Azure provider, you can manage the full lifecycle of your Azure SQL resources using a declarative, idempotent approach. The azurerm_sql_server and azurerm_sql_database resources ensure that your target server and database are created and configured exactly as specified, every time you run terraform apply.

Terraform templates can be integrated into Azure DevOps pipelines using the Terraform extensions or Azure CLI tasks for authentication and state management. This allows you to bring the benefits of IaC, such as version control, code review, and repeatable deployments, to your database resource provisioning process.

Declarative Database Deployments with SqlPackage

SqlPackage Tool

When it comes to deploying changes to your Azure SQL database schemas and objects, the SqlPackage utility is a powerful tool that enables state-based, declarative deployments. By generating a DACPAC (Data-tier Application Package) file from your SQL project source code, SqlPackage can compare the desired state of your database against its current state, and apply only the necessary changes to bring the database up to date.

This declarative approach ensures that your database deployments are idempotent and consistent across environments. Whether you're deploying to a fresh database or updating an existing one, SqlPackage will determine and apply the required changes, reducing the risk of configuration drift or failed deployments.

For example, suppose you have a SQL project that defines your database schema, along with a set of pre- and post-deployment scripts to handle data migrations and other tasks. To deploy this project to a target Azure SQL database using SqlPackage, you would first build the project to generate a DACPAC file:

```bash

SqlPackage /Action:Build /SourceFile:MyDatabase.sqlproj /TargetFile:MyDatabase.dacpac

```

Then, you can use the SqlPackage Publish action to deploy the DACPAC to your target database:

```bash

SqlPackage /Action:Publish /SourceFile:MyDatabase.dacpac /TargetServerName:sqlserver-prod.database.windows.net /TargetDatabaseName:MyDatabase /TargetUser:sqladmin /TargetPassword:$SecurePassword

```

The Publish action will compare the contents of the DACPAC with the current state of the target database, generate a deployment script containing the necessary changes, and execute that script to update the database. If you run the same Publish command multiple times, SqlPackage will intelligently detect any changes in the DACPAC and apply only the required modifications to the database, ensuring idempotent and repeatable deployments.

However, it's important to note that SqlPackage is primarily focused on deploying database schema and object changes, and has limited capabilities when it comes to creating or configuring the Azure SQL server and database resources themselves. For those tasks, it's best to leverage Azure CLI, PowerShell, or your preferred IaC tool to ensure your target environments are provisioned correctly before running SqlPackage.

Putting It All Together

By combining the power of Azure DevOps multi-stage pipelines, Azure CLI or IaC tools for resource deployment, and SqlPackage for database updates, all wrapped in an "everything-as-code" methodology, you can create a robust and flexible continuous delivery process for your Azure SQL databases.

The granular control provided by multi-stage pipelines allows you to define quality gates and approvals between your environments, while the idempotent nature of your resource and database deployments ensures consistency and repeatability across the pipeline. With all of your configuration and deployment logic captured as code and templates, you can version-control your entire database delivery process alongside your application code.

Each stage in this pipeline serves a specific purpose, from fast iterations and integration testing in development, to progressive quality checks and verifications in QA and pre-production, to the cautious and tightly controlled rollout to production. And with Azure CLI or Terraform ensuring consistent resource provisioning, and SqlPackage handling idempotent database deployments, the entire process is reliable, repeatable, and fully automated.

The end result is a streamlined and robust database deployment process that empowers your team to deliver value to your users faster, and with greater confidence. Your deployments become repeatable and consistent across environments, reducing the risk of manual errors or configuration drift. With the full power and flexibility of Azure DevOps at your disposal, you can continuously enhance and optimize your pipeline as your delivery needs evolve.

Contact us today to discover how our services can help your business succeed. Our expert team provides tailored solutions to optimize your technology infrastructure, enhance productivity, and drive growth.

Frequently Asked Questions

What do you mean by multi-stage pipeline?

A multi-stage pipeline is an Azure DevOps pipeline that consists of multiple stages, each representing a distinct phase in the deployment process, such as development, QA, pre-production, and production. This structure allows teams to enforce quality gates, automate approvals, and gain visibility into the flow of changes from code to production.

What is the stage in a release pipeline?

In an Azure DevOps release pipeline, a stage represents a logical boundary in the deployment process, typically corresponding to a specific environment or a set of related tasks, such as building, testing, or deploying the application. Stages help organize and control the flow of releases, ensuring each step meets predefined criteria before moving forward.

Does Azure DevOps depend on the previous stage?

Yes, Azure DevOps pipelines can be configured with stage dependencies, where a stage will only run if the previous stage has completed successfully, allowing for a sequential and gated deployment process. This ensures that issues are caught early and only validated code progresses through the pipeline.